How AI is being abused to create child sexual abuse material

About this audio

Images of child sexual abuse generated by artificial intelligence are on the rise.

Australia’s eSafety Commissioner, Julie Inman Grant, says 100,000 Australians a month have accessed an app that allows users to upload images of other people – including minors – to receive a depiction of what they would look like naked.

Predators are known to share know-how to produce and spread these images – and in Australia, the AI tools used to create this material are not illegal.

All the while, Julie Inman Grant says not a single major tech company has expressed shame or regret for its role in enabling it.

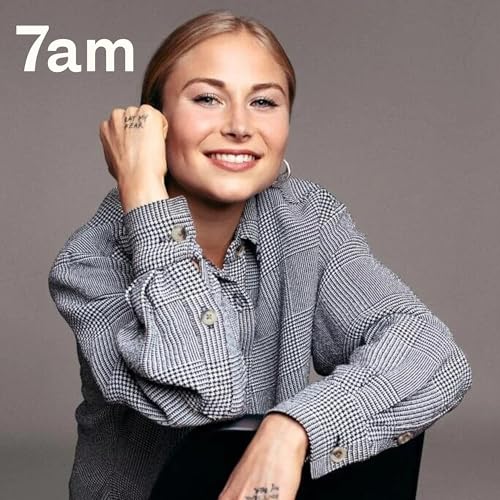

Today, advocate for survivors of child sexual assault and director of The Grace Tame Foundation, Grace Tame, on how governments and law enforcement should be thinking about AI and child abuse – and whether tech companies will cooperate.

If you enjoy 7am, the best way you can support us is by making a contribution at 7ampodcast.com.au/support.

Socials: Stay in touch with us on Instagram

Guest: Advocate for survivors of child sexual assault and director of The Grace Tame Foundation, Grace Tame

See omnystudio.com/listener for privacy information.